SAM featured in Scientific American

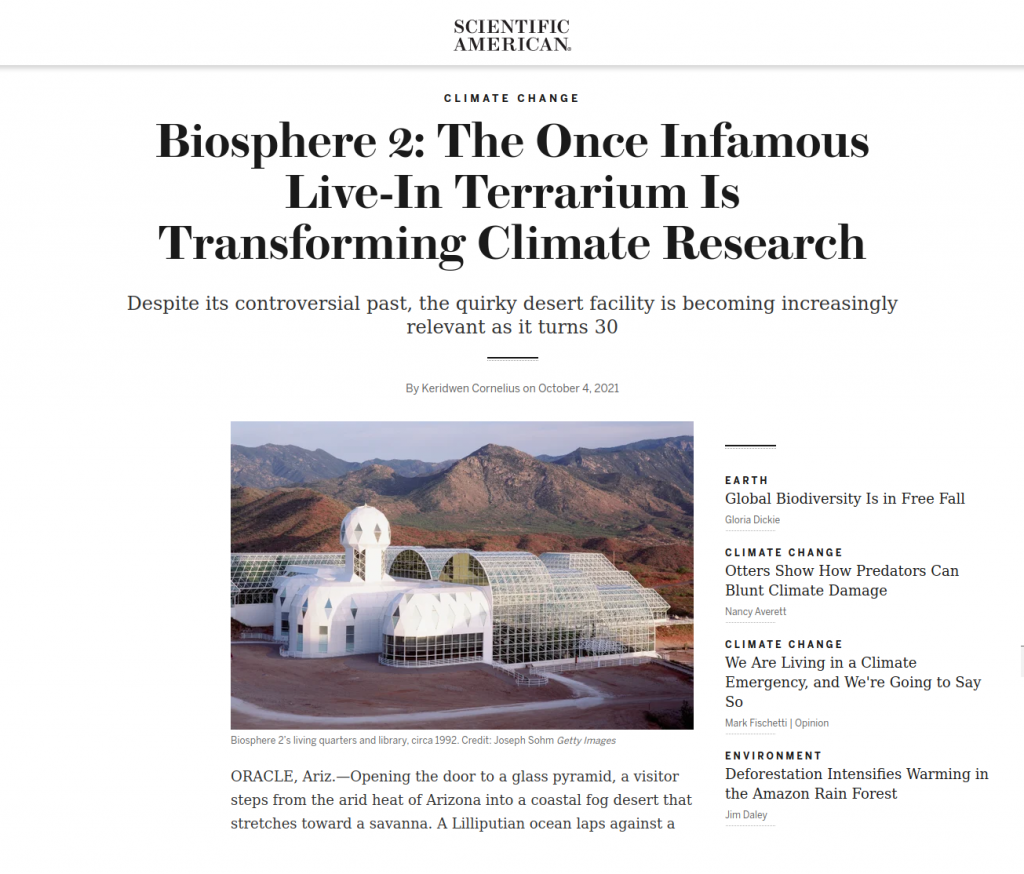

Biosphere 2: The Once Infamous Live-In Terrarium Is Transforming Climate Research, October 4, 2021

By Keridwen Cornelius for Scientific American

“The Space Analog for the Moon and Mars (SAM) ‘is very much, at a scientific level and even a philosophical level, similar to the original Biosphere,’ says SAM director Kai Staats. Unlike other space analogues around the world, SAM will be a hermetically sealed habitat. Its primary purpose will be to discover how to transition from mechanical methods of generating breathable air to a self-sustaining system where plants, fungi and people produce a precise balance of oxygen and carbon dioxide.”